What is a Kubernetes Cluster?

At its core, a Kubernetes cluster is a group of machines working together to run and manage containerized applications. These machines, called nodes, can be physical servers or virtual machines, which makes the platform highly scalable and flexible. A Kubernetes cluster has two kinds of nodes:

- Master Node: Acting as the “brain” of the Kubernetes cluster, the master node (today usually called the control plane node) runs the components that manage the overall cluster state. It schedules workloads, processes API requests, and tracks deployments so that applications keep running smoothly.

- Worker Nodes: Known as the cluster’s “workhorses,” worker nodes run the actual containerized applications. They receive instructions from the master node and supply the CPU, memory, and other resources needed to run containers.

A Kubernetes cluster provides a streamlined platform for deploying containerized applications, automating the complexities of managing multiple containers. Leveraging a Kubernetes cluster empowers developers with:

- Scalability: Effortlessly scale applications up or down to meet changing demand.

- High Availability: Maintain application accessibility, even when nodes experience failures.

- Flexibility: Deploy applications across on-premises, cloud, and hybrid environments.

Key Components of a Kubernetes Cluster

A Kubernetes cluster comprises several essential components working together to orchestrate containerized applications. The image given above shows how each component fits within the control plane and the data plane.

1. Control Plane

The control plane, shown at the top of the image, manages the cluster’s configuration and deployment:

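- API Server: As illustrated, the API Server is the cluster’s communication hub. Users, kubectl, and the other components all interact with the cluster through it, and it validates and processes every incoming request. Because every request passes through it, strong authentication, authorization, and Kubernetes Security Posture Management (KSPM) practices are critical to handle user interactions safely.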

- Scheduler: The Scheduler, visible in the image, assigns newly created pods to nodes. It filters out nodes that lack the capacity for a pod’s resource requests and ranks the remaining ones, which helps balance workloads across the cluster.

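- Controller Manager: The Controller Manager runs controllers that continuously compare the cluster’s current state with the desired state and make changes to close the gap. For example, one controller creates new pods when a Deployment has fewer replicas than requested.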

- etcd: Shown as the data store in the image, etcd is a key-value store that holds all cluster state data. Because it contains sensitive configuration, including Secrets, encrypting etcd data at rest and applying KSPM practices are essential to avoid data leaks.
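To make the Scheduler’s role more concrete, here is a minimal sketch of its two phases, filtering and scoring. It is a simplified model and not kube-scheduler’s actual code. The node names, the capacities, and the scoring rule ("prefer the node with the most free capacity left") are illustrative assumptions. That rule is only similar in spirit to the scheduler’s default "least allocated" scoring.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int          # allocatable CPU in millicores (1000m = 1 core)
    memory_capacity: int       # allocatable memory in MiB
    cpu_requested: int = 0     # CPU already requested by pods on this node
    memory_requested: int = 0  # memory already requested by pods on this node


@dataclass
class PodRequest:
    name: str
    cpu: int     # requested millicores
    memory: int  # requested MiB


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter step: the node must have room for the pod's resource requests."""
    return (node.cpu_requested + pod.cpu <= node.cpu_capacity
            and node.memory_requested + pod.memory <= node.memory_capacity)


def score(node: Node, pod: PodRequest) -> float:
    """Score step: average fraction of capacity left free after placing the pod."""
    cpu_free = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_free = (node.memory_capacity - node.memory_requested - pod.memory) / node.memory_capacity
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits (the pod stays Pending)."""
    feasible = [n for n in nodes if fits(n, pod)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(n, pod)).name


# Hypothetical cluster state.
nodes = [
    Node("node-a", cpu_capacity=2000, memory_capacity=4096, cpu_requested=1500, memory_requested=1024),
    Node("node-b", cpu_capacity=4000, memory_capacity=8192, cpu_requested=1000, memory_requested=2048),
    Node("node-c", cpu_capacity=1000, memory_capacity=1024),
]
print(schedule(PodRequest("web", cpu=500, memory=1024), nodes))  # node-b
```

All three nodes pass the filter. node-b wins because it keeps the most headroom after placement, and that is how scoring spreads load across the cluster.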

2. Data Plane

The data plane (bottom section of the image) is made up of worker nodes that run applications:

- kubelet: As seen on each worker node in the image, the kubelet is an agent that receives pod specifications from the control plane and makes sure the containers they describe are running and healthy.

- Container Runtime: Each worker node has a container runtime (e.g., containerd or CRI-O), as represented in the image. The runtime pulls images and runs the containers. Choosing a secure runtime and keeping it updated is crucial for container security.

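- Kube-proxy: Also shown within each worker node, kube-proxy maintains network rules on its node (for example, iptables or IPVS rules) so that traffic sent to a Service reaches the pods behind it. Restricting which pods may talk to each other is a separate job, handled by NetworkPolicies that the cluster’s network plugin enforces.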
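The sketch below models what kube-proxy’s rules achieve for a single Service. A label selector picks the ready backend pods, and each new connection goes to one of them. It is an illustrative model, not how kube-proxy is implemented, since the real component programs kernel rules. The class name `ServiceRouter`, the pod names, and the IPs are hypothetical. Round-robin is one of the balancing strategies IPVS mode supports.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def select_endpoints(selector: dict, pods: list) -> list:
    """Ready pods whose labels contain every key/value pair in the Service selector."""
    return [
        p for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


class ServiceRouter:
    """Hypothetical stand-in for the forwarding rules kube-proxy sets up for one Service."""

    def __init__(self, selector: dict, pods: list):
        self.endpoints = select_endpoints(selector, pods)
        if not self.endpoints:
            raise ValueError("Service has no ready endpoints")
        self._next = itertools.cycle(self.endpoints)  # round-robin over backends

    def route(self) -> str:
        """Pick the backend pod IP for the next connection."""
        return next(self._next).ip


pods = [
    Pod("web-1", "10.0.0.11", {"app": "web"}),
    Pod("web-2", "10.0.0.12", {"app": "web"}),
    Pod("web-3", "10.0.0.13", {"app": "web"}, ready=False),  # failing readiness, so excluded
    Pod("db-1", "10.0.0.21", {"app": "db"}),
]
router = ServiceRouter({"app": "web"}, pods)
print([router.route() for _ in range(3)])  # ['10.0.0.11', '10.0.0.12', '10.0.0.11']
```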

So, where is the greatest need for security within these components?

Security and Kubernetes Security Posture Management (KSPM) are most critical for components like the API Server and etcd, as shown in the image. Protection can’t stop there, though: continuously monitoring configurations, detecting vulnerabilities, and applying security measures across the entire cluster is what keeps applications and sensitive data safe.

How Does a Kubernetes Cluster Work?

With an understanding of the key components, let’s explore how a Kubernetes cluster operates to orchestrate containerized applications. Although Kubernetes has intricate details, the main workflow is straightforward.

- Defining the Desired State: Developers write a manifest, usually a YAML file, that specifies the desired state of an application’s workload, including details such as container images, pod configuration, and resource requirements. They then submit it to the API server, typically with kubectl apply.

- Deploying Container Images: The scheduler assigns each pod to a node, and the kubelet on that node pulls the container images from a container registry (e.g., Docker Hub) and starts the containers. Network and compute resources are allocated automatically, so workloads are distributed efficiently without manual intervention.

- Maintaining the Desired State: The control plane continuously compares the cluster’s actual state with the desired state. If something disrupts it (such as a pod failure), Kubernetes restores it automatically by restarting failed containers and replacing unhealthy pods. This process is part of Kubernetes’ self-healing mechanism.

- Rolling Updates and Scaling: Kubernetes can perform rolling updates that gradually replace pods running the old version of an application with pods running the new one, avoiding downtime when configured properly. It can also autoscale based on demand (for example, with the Horizontal Pod Autoscaler), adapting to workload fluctuations for better resource utilization.
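The desired-state workflow above can be sketched in a few lines. The manifest is written as a Python dict mirroring a Deployment. kubectl accepts JSON as well as YAML, so the dumped string could be saved and applied. The reconcile function is a deliberately simplified model of one controller pass, not the real ReplicaSet controller. The Deployment name, image, and pod names are hypothetical.

```python
import json

# Desired state: a Deployment manifest (name and image are hypothetical).
desired = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [{
                    "name": "web",
                    "image": "registry.example.com/web:1.0",
                    "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                }]
            },
        },
    },
}
manifest = json.dumps(desired, indent=2)  # what you might save and pass to kubectl apply -f


def reconcile(desired_replicas: int, pods: list[dict]) -> dict:
    """One pass of a simplified controller loop: compare actual pods with the
    desired replica count and return the actions needed to close the gap."""
    running = [p["name"] for p in pods if p["phase"] == "Running"]
    failed = [p["name"] for p in pods if p["phase"] == "Failed"]
    gap = desired_replicas - len(running)
    return {
        # Failed pods are cleaned up; surplus running pods are removed when scaling down.
        "delete": failed + (running[gap:] if gap < 0 else []),
        # Missing replicas are created from the pod template.
        "create": max(gap, 0),
    }


actual = [
    {"name": "web-a", "phase": "Running"},
    {"name": "web-b", "phase": "Failed"},  # e.g. evicted from its node
    {"name": "web-c", "phase": "Running"},
]
print(reconcile(desired["spec"]["replicas"], actual))  # {'delete': ['web-b'], 'create': 1}
```

A real controller runs this comparison continuously, triggered by watch events from the API server, so any drift is corrected shortly after it appears.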

By automating tasks such as deployment, scaling, and updates, a Kubernetes cluster simplifies container orchestration, allowing teams to focus on application development without needing to manage the infrastructure manually.

How to Create a Kubernetes Cluster?

Now that we understand the inner workings of a Kubernetes cluster, let’s explore how you can build your own.

There are several approaches to creating a Kubernetes cluster, catering to different needs and experience levels. Here’s a breakdown of some popular options:

- Cloud-Based Kubernetes Services: If you’re deploying in the cloud, managed platforms like Azure Kubernetes Service (AKS) and Amazon Elastic Kubernetes Service (EKS) simplify cluster creation by running the control plane for you. Azure’s wizard-based setup streamlines K8s deployment, while EKS abstracts much of the setup, letting you focus on configuration and scaling.

- Minikube for Local Environments: For those new to Kubernetes or looking to experiment, Minikube is an excellent option. By installing Minikube alongside kubectl (the command-line tool for Kubernetes), you can start a local cluster with just the minikube start command from your terminal. This local setup is ideal for developers and engineers testing applications on their machines before deploying to larger environments.

With options tailored for both cloud deployments and local testing, Kubernetes clusters are accessible to beginners and experienced users alike, making it easy to start building containerized applications.

Benefits of Creating Kubernetes Clusters

At this point, the inner workings of a Kubernetes cluster and its orchestration capabilities should be clear. But why exactly would you choose to create a Kubernetes cluster?

The key benefit lies in its ability to abstract away the complexity of container orchestration and resource management. Kubernetes takes care of the heavy lifting, allowing developers to focus on building and delivering applications.

Here’s a breakdown of some specific benefits offered by Kubernetes clusters:

1. Scalability Made Simple

Kubernetes takes the hassle out of scaling your applications. Imagine your app suddenly getting a spike in users. No need to panic! With Kubernetes, you can automatically increase the number of running pods to handle the load, thanks to features like the Horizontal Pod Autoscaler.

It keeps an eye on metrics such as your app’s CPU usage and adjusts the number of running pods on the fly. So whether you’re experiencing a rush during peak hours or winding down during quieter times, Kubernetes has your back.
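The scaling decision has a simple core formula, given in the Horizontal Pod Autoscaler documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that formula with the default 0.1 tolerance and min/max bounds. It leaves out the stabilization windows, readiness handling, and multi-metric logic of the real controller. The function name and sample numbers are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA calculation:
    desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    """
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.0 (HPA's default tolerance is 0.1).
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the minReplicas / maxReplicas bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=60))  # 5: CPU at 90% vs. a 60% target
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2: load dropped, scale in
```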

2. Reliability You Can Count On

When it comes to keeping your applications up and running, Kubernetes shines. It’s built with reliability in mind, meaning it can bounce back from failures without you lifting a finger. If a container crashes, Kubernetes restarts it automatically, and if a pod or node fails, it schedules replacement pods elsewhere.

Plus, it can spread replicas of critical components across different nodes, reducing the risk of a single point of failure. This way, even if a node goes down, your users are unlikely to notice.

3. Smart Resource Management

Kubernetes is all about making the most of what you have. It manages resources like CPU and memory to minimize waste: each container declares what it needs, and the scheduler packs workloads onto nodes accordingly.

You can also set limits on how much CPU and memory each container can use, which keeps everything running smoothly and prevents any one app from hogging all the resources. This means better performance and cost savings, especially in environments where demand can change rapidly.
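Requests and limits are written in Kubernetes’ resource quantity format, where "500m" means half a CPU core and "256Mi" means 256 mebibytes. The sketch below parses a subset of that format and flags containers that have no limits or whose requests exceed their limits. The API server rejects the second case outright. The parser handles only the common suffixes, and the container names are hypothetical.

```python
from decimal import Decimal

# Common suffixes from the Kubernetes resource quantity format (a subset).
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> Decimal:
    """CPU in cores: '500m' (millicores) -> 0.5, '2' -> 2."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def parse_memory(quantity: str) -> int:
    """Memory in bytes: '256Mi' -> 268435456, '128M' -> 128000000."""
    for suffix, factor in BINARY.items():  # check two-letter binary suffixes first
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-2]) * factor)
    for suffix, factor in DECIMAL.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-1]) * factor)
    return int(quantity)


def check_resources(container: dict) -> list[str]:
    """Flag containers without limits, or whose requests exceed their limits."""
    resources = container.get("resources", {})
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems = []
    for name, parse in (("cpu", parse_cpu), ("memory", parse_memory)):
        if name not in limits:
            problems.append(f"{container['name']}: no {name} limit")
        elif name in requests and parse(requests[name]) > parse(limits[name]):
            problems.append(f"{container['name']}: {name} request exceeds limit")
    return problems


api = {
    "name": "api",
    "resources": {
        "requests": {"cpu": "250m", "memory": "128Mi"},
        "limits": {"cpu": "500m", "memory": "256Mi"},
    },
}
print(check_resources(api))  # []
```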

4. Flexibility to Adapt

One of the best things about Kubernetes is how adaptable it is. You’re not tied to a specific cloud provider or stuck with just one deployment option. Want to run your applications in your own data center? No problem.

Need to shift to the cloud? Easy! Because every conformant Kubernetes cluster exposes the same APIs, you can move workloads between on-premises and various public cloud environments with minimal changes. This flexibility means you can choose what works best for your business without feeling locked in.

Put together, these benefits translate to significant advantages for containerized application development and deployment:

- Increased Reliability: Self-healing and automated rollouts lead to more reliable and resilient applications with minimal downtime.

- Improved Scalability: Kubernetes clusters effortlessly scale your applications up or down based on changing demands, ensuring optimal resource utilization.

- Faster Development Cycles: Automated deployments and rollbacks enable faster development cycles and quicker time to market for your applications.

- Simplified Management: Kubernetes abstracts away the complexities of container management, freeing developers to focus on application logic and innovation.
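The automated rollouts mentioned above are governed by a Deployment’s RollingUpdate settings. maxSurge caps how many extra pods may exist during the update, and maxUnavailable caps how many may be missing. Kubernetes also accepts percentages for both, each defaulting to 25%. The simulation below uses absolute numbers and assumes new pods become ready instantly. It is an illustrative model, not the Deployment controller’s code.

```python
def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> list[tuple[int, int]]:
    """Simulate a RollingUpdate, returning (old_pods, new_pods) after each step.
    Simplification: new pods are assumed to become ready immediately."""
    if max_surge == 0 and max_unavailable == 0:
        # Kubernetes rejects this combination too: the rollout could never progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + max_surge.
        new += max(0, min(replicas - new, replicas + max_surge - (old + new)))
        # Scale down: keep at least replicas - max_unavailable pods available.
        old -= max(0, min(old, (old + new) - (replicas - max_unavailable)))
        steps.append((old, new))
    return steps


print(rolling_update(3))  # [(3, 0), (2, 1), (1, 2), (0, 3)]
```

With maxSurge=1 and maxUnavailable=0, capacity never drops below three pods, which is how a rolling update can avoid downtime.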

Security Risks in Kubernetes Clusters

While Kubernetes provides a wealth of benefits, it also brings along a set of security challenges that users need to be aware of. Let’s dive into some of the most pressing risks:

Vulnerabilities Within Containers

Containers aren’t immune to security issues. They can harbor vulnerabilities that, if exploited, threaten not just the containers themselves but also the nodes and the overall cluster. Problems often arise from using outdated container images or pulling in third-party images without adequate security checks. Ensuring that you’re using up-to-date, trusted images is crucial for maintaining security.
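Part of "using up-to-date, trusted images" can be automated before anything reaches the cluster. The sketch below checks two things about an image reference: whether it comes from an allowlisted registry, and whether it is pinned to an immutable digest rather than a mutable tag such as ":latest". It does not scan for CVEs, which requires a dedicated image scanner. The registry allowlist and image names are hypothetical.

```python
import re

TRUSTED_REGISTRIES = ("registry.example.com/",)  # hypothetical allowlist
DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def image_findings(image: str) -> list[str]:
    """Return hygiene problems for a container image reference."""
    findings = []
    if not image.startswith(TRUSTED_REGISTRIES):
        findings.append("untrusted registry")
    if DIGEST.search(image) is None:
        # Only look at the last path segment so a registry port (host:5000/...) isn't mistaken for a tag.
        name = image.rsplit("/", 1)[-1]
        if ":" not in name or name.endswith(":latest"):
            findings.append("mutable tag (latest or untagged)")
        else:
            findings.append("not pinned to a digest")
    return findings


print(image_findings("docker.io/library/nginx:latest"))
```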

Misconfigurations

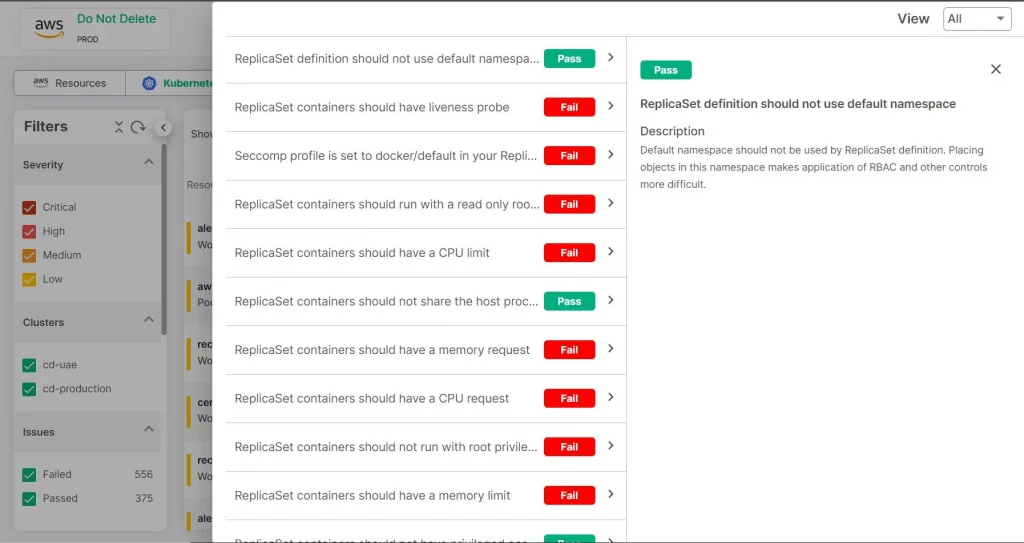

One of the biggest challenges in Kubernetes security is misconfigurations. These mistakes can lead to unauthorized access, data leaks, and various vulnerabilities. Relying on default settings or using publicly available Infrastructure as Code (IaC) files for sensitive configurations—like those related to authentication—can leave your Kubernetes API and applications exposed to potential threats.
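Many risky defaults are visible directly in a pod spec. The sketch below walks a pod manifest (as a dict) and flags a few well-known misconfigurations: host networking, privileged containers, privilege escalation left enabled, and containers not required to run as non-root. The field names come from the Kubernetes Pod API. The selection of checks and the pod contents are illustrative, and a real KSPM tool covers far more. In a live cluster, the built-in Pod Security Admission "restricted" profile enforces similar rules.

```python
def find_misconfigurations(pod: dict) -> list[str]:
    """Flag common security misconfigurations in a Pod manifest."""
    spec = pod.get("spec", {})
    findings = []
    if spec.get("hostNetwork"):
        findings.append("pod uses the host network namespace")
    pod_ctx = spec.get("securityContext", {})
    for container in spec.get("containers", []):
        ctx = container.get("securityContext", {})
        name = container.get("name", "?")
        if ctx.get("privileged"):
            findings.append(f"{name}: runs privileged")
        # Unless explicitly set to false, privilege escalation is allowed.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation not set to false")
        # runAsNonRoot can be set per container or inherited from the pod level.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append(f"{name}: not required to run as non-root")
    return findings


risky = {"spec": {"hostNetwork": True,
                  "containers": [{"name": "app", "securityContext": {"privileged": True}}]}}
for finding in find_misconfigurations(risky):
    print(finding)
```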

Risks from the Network

Kubernetes clusters can be prime targets for network-based attacks. To guard against these threats, effective network segmentation and robust policies are essential. By dividing the network into smaller, isolated segments, you can minimize the attack surface. Coupled with clear network policies that dictate how pods interact with each other and with external services, you create a fortified communication environment that enhances security.
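A common pattern is a default-deny ingress NetworkPolicy plus narrow allow rules. The sketch below builds two such policies as manifest dicts and evaluates them with a simplified model of NetworkPolicy semantics. A pod selected by no policy accepts all traffic; once selected, it accepts only traffic that some ingress rule allows. The model ignores namespaces, ports, ipBlocks, and matchExpressions. The policy names and labels are hypothetical. In a real cluster, policies only take effect if the network plugin supports them.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Simplified matchLabels check; an empty selector ({}) matches every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(src_labels: dict, dst_labels: dict, policies: list[dict]) -> bool:
    """Decide whether a pod with src_labels may connect to a pod with dst_labels."""
    selecting = [
        p for p in policies
        if matches(p["spec"]["podSelector"], dst_labels)
        and "Ingress" in p["spec"].get("policyTypes", ["Ingress"])
    ]
    if not selecting:
        return True  # pods no policy selects are non-isolated and accept all traffic
    for policy in selecting:
        for rule in policy["spec"].get("ingress", []):
            peers = rule.get("from", [])
            if not peers:
                return True  # a rule without a 'from' list allows every source
            if any("podSelector" in peer and matches(peer["podSelector"], src_labels)
                   for peer in peers):
                return True
    return False  # isolated pod and no rule allowed this source


default_deny = {
    "apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
    "metadata": {"name": "default-deny-ingress"},
    "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
}
allow_frontend = {
    "apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-api"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "api"}},
        "policyTypes": ["Ingress"],
        "ingress": [{"from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}]}],
    },
}
policies = [default_deny, allow_frontend]
print(ingress_allowed({"app": "frontend"}, {"app": "api"}, policies))  # True
print(ingress_allowed({"app": "batch"}, {"app": "api"}, policies))     # False
```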

Handling Secrets Securely

Handling sensitive information, like passwords and API keys, is a critical aspect of security in Kubernetes. If secrets aren’t managed correctly, the consequences can be dire. Kubernetes provides Secrets for storing this kind of data (ConfigMaps, by contrast, are meant for non-sensitive configuration). However, Secret values are only base64-encoded by default and are stored unencrypted in etcd unless encryption at rest is enabled. It’s therefore vital to implement encryption and strict access controls to ensure that only authorized users can access these secrets.
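The short example below shows why base64 encoding in a Secret manifest is not protection. Anyone who can read the Secret object can reverse the encoding with a single standard-library call. The Secret name and password are made up for illustration.

```python
import base64

# A Secret manifest as a dict; the name and value are hypothetical.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": base64.b64encode(b"s3cr3t-pa55").decode()},
}

encoded = secret["data"]["password"]
decoded = base64.b64decode(encoded).decode()  # no key needed: encoding is not encryption
print(decoded)  # s3cr3t-pa55
```

This is why encryption at rest and tight RBAC on Secret objects matter. Base64 only makes binary data safe to embed in a manifest.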

Data Security Risks

In a Kubernetes environment, safeguarding your data is paramount. Implementing strong data encryption practices ensures that your data remains secure both in transit and at rest, adding an essential layer of protection against potential breaches.
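For data at rest inside the cluster, the kube-apiserver can encrypt resources such as Secrets before writing them to etcd. This is configured through an EncryptionConfiguration file passed with the --encryption-provider-config flag. The sketch below generates such a configuration with a random 32-byte key for the secretbox provider. The trailing identity provider lets the API server still read data written before encryption was enabled. Because YAML accepts JSON syntax, the dumped output should be usable as the file. The key name is arbitrary, and for production an external KMS provider is generally recommended over a key stored in a local file.

```python
import base64
import json
import os


def encryption_config(key_name: str = "key1") -> dict:
    """Build an EncryptionConfiguration that encrypts Secrets with a fresh key."""
    key = base64.b64encode(os.urandom(32)).decode()  # secretbox requires a 32-byte key
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [{
            "resources": ["secrets"],
            "providers": [
                # The first provider is used to encrypt new writes.
                {"secretbox": {"keys": [{"name": key_name, "secret": key}]}},
                # identity = plaintext; allows reading data stored before encryption.
                {"identity": {}},
            ],
        }],
    }


cfg = encryption_config()
print(json.dumps(cfg, indent=2))
```

Existing Secrets are only encrypted when they are next written, so after enabling the configuration they typically need to be rewritten once.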

Runtime Security Flaws

Even with the best configurations and well-protected containers, runtime security is still a major concern. During the operation of your cluster, attackers might exploit vulnerabilities to create rogue pods or alter running containers. Continuous monitoring, anomaly detection, and the use of runtime security tools are essential to quickly identify and address these threats before they escalate.
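One simple form of runtime anomaly detection compares observed process activity against a per-image baseline. It flags processes a container has never been expected to run, such as a shell spawned inside an API server, as well as pods running images nobody approved. The sketch below assumes process events are already being collected (runtime security tools gather them from the kernel) and focuses only on the comparison. The baseline, pod names, and images are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical baseline: processes each approved image is expected to run.
BASELINE = {
    "registry.example.com/shop/api:1.4.2": {"python3", "gunicorn"},
}


@dataclass
class ProcessEvent:
    pod: str
    image: str
    process: str


def detect_anomalies(events: list[ProcessEvent]) -> list[str]:
    """Return an alert for every event that deviates from the baseline."""
    alerts = []
    for e in events:
        expected = BASELINE.get(e.image)
        if expected is None:
            alerts.append(f"{e.pod}: unknown image {e.image} (possible rogue pod)")
        elif e.process not in expected:
            alerts.append(f"{e.pod}: unexpected process '{e.process}'")
    return alerts


events = [
    ProcessEvent("api-7d9f", "registry.example.com/shop/api:1.4.2", "gunicorn"),
    ProcessEvent("api-7d9f", "registry.example.com/shop/api:1.4.2", "sh"),
    ProcessEvent("miner-x1", "unknown.example/miner:1", "miner"),
]
for alert in detect_anomalies(events):
    print(alert)
```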

Access Control Challenges

Weak access controls can result in unauthorized actions and data breaches. Leveraging Kubernetes-native Role-Based Access Control (RBAC) helps ensure that users and services only have the permissions they truly need, providing a layer of security that can prevent misuse.
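RBAC permissions are expressed as rules listing API groups, resources, and verbs. RBAC is purely additive: there are no deny rules, so a request is allowed if any bound rule matches it. The sketch below evaluates that matching for a read-only "pod-reader" Role, where the empty string denotes the core API group. It is a simplified model that ignores RoleBindings, namespaces, resourceNames, and subresources. The Role contents are illustrative.

```python
def rule_allows(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """A rule matches if group, resource, and verb are each listed (or '*')."""
    def has(values: list, item: str) -> bool:
        return "*" in values or item in values
    return (has(rule["apiGroups"], api_group)
            and has(rule["resources"], resource)
            and has(rule["verbs"], verb))


def is_allowed(roles: list[dict], api_group: str, resource: str, verb: str) -> bool:
    # Additive model: any matching rule in any bound role grants access.
    return any(rule_allows(rule, api_group, resource, verb)
               for role in roles for rule in role["rules"])


pod_reader = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "default", "name": "pod-reader"},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "watch", "list"]}],
}
print(is_allowed([pod_reader], "", "pods", "list"))    # True
print(is_allowed([pod_reader], "", "pods", "delete"))  # False
```

Granting only the verbs a workload actually uses, as pod-reader does, is the least-privilege principle the paragraph above describes.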

Protecting Your Kubernetes Environment with CloudDefense.AI

Now that we’ve talked about the potential security flaws in Kubernetes, let’s dive into a solution that can help you prevent those issues before they even arise. Given that Kubernetes is central to modern cloud-native application infrastructure, protecting it is essential.

That’s where CloudDefense.AI steps in. We offer a robust cloud security platform specifically designed to boost the security posture of your Kubernetes clusters. Here’s how we can help:

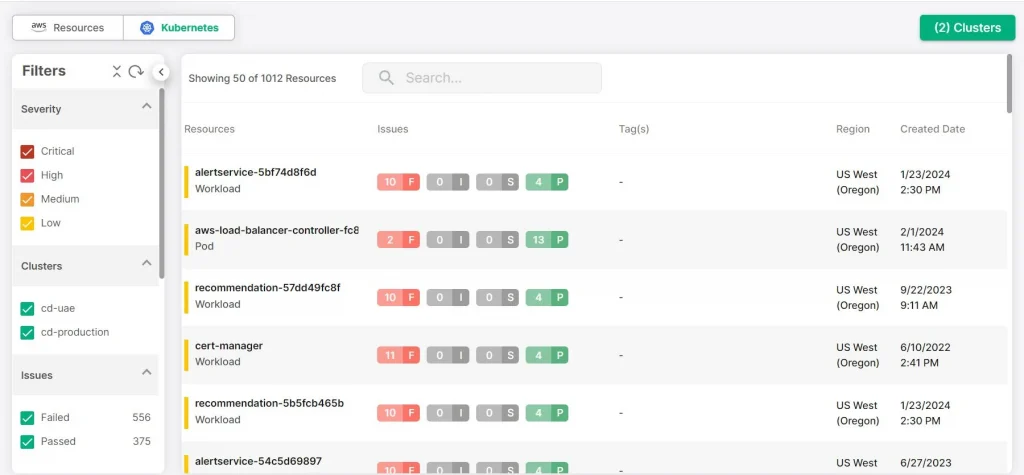

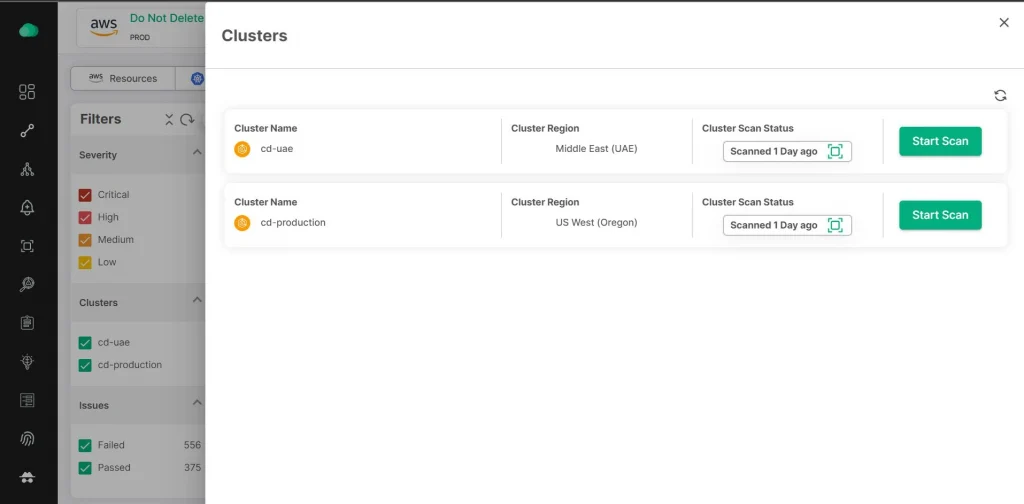

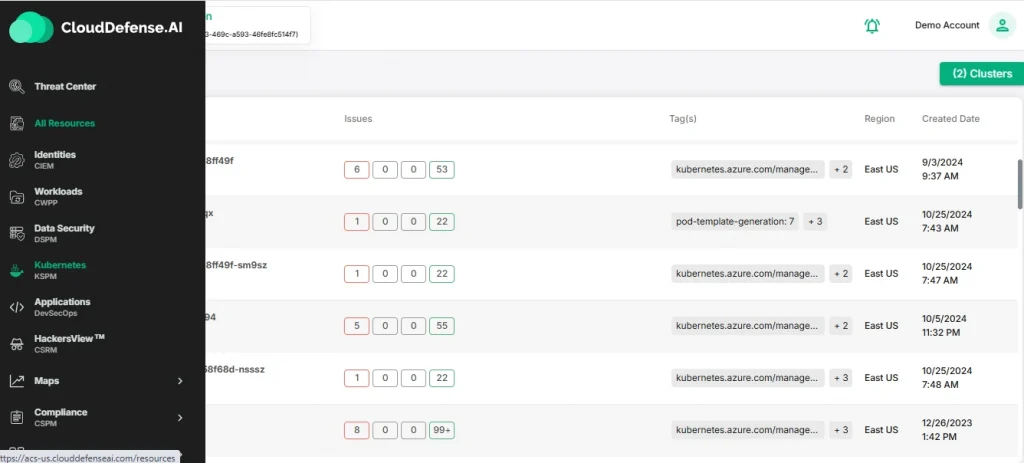

In-Depth Visibility

With CloudDefense.AI, you get a clear view of your Kubernetes configurations and how things are running. This level of visibility helps you pinpoint vulnerabilities before they turn into real headaches.

Real-Time Monitoring

Think of CloudDefense.AI as your 24/7 security guard. It continuously monitors your clusters, ready to detect and respond to any security threats that pop up. This means you can rest easy, knowing that someone is always watching over your environment.

Smart Threat Detection

Thanks to advanced artificial intelligence and machine learning, CloudDefense.AI can spot potential risks that you might miss. Its intelligent threat detection capabilities help you stay one step ahead of any would-be attackers.

Fixing Misconfigurations

Misconfigurations can be a sneaky source of security issues. CloudDefense.AI scans your setup to catch these mistakes and gives you practical advice on how to fix them. This proactive approach keeps your environment secure from the start.

Runtime Protection

Security doesn’t stop when your applications go live. CloudDefense.AI keeps an eye on what’s happening in real-time, monitoring for any suspicious activity or potential breaches. This ensures your clusters remain safe while they’re up and running.

Easy Compliance

With this KSPM solution, you can define custom security policies and enforce rules that cater to your organization’s requirements. Setting custom policies allows you to take a comprehensive approach to protecting your Kubernetes environment.

Conclusion

We hope this article has shed light on the immense potential Kubernetes clusters offer for managing containerized applications. But remember: with great power comes great responsibility. Securing your Kubernetes cluster is crucial, and a multi-layered approach is key. Ready to see the power of Kubernetes in action and experience the security benefits of CloudDefense.AI firsthand? Don’t wait! Book your free demo today and discover how this dynamic duo can revolutionize your containerized application development and deployment.