The Agentic AI system has brought an AI revolution in the industry. From being a buzzword to becoming a vital AI agent in the cloud environment, Agentic AI has made a huge leap. How is it helping organizations? The autonomous capability to make decisions, perform an action, and learn from LLMs, allows organizations to perform complex tasks.

Organizations are integrating the new AI technology in their cloud environment for various tasks. While these integrations offer numerous benefits, Agentic AI’s characteristics also introduce numerous cloud threats.

The interconnectivity, autonomy, and learning capabilities of Agentic AI have become a point of exploitation for modern attackers. We will highlight 5 common cloud threats exploiting Agentic AI systems that you need to watch out for before integrating them.

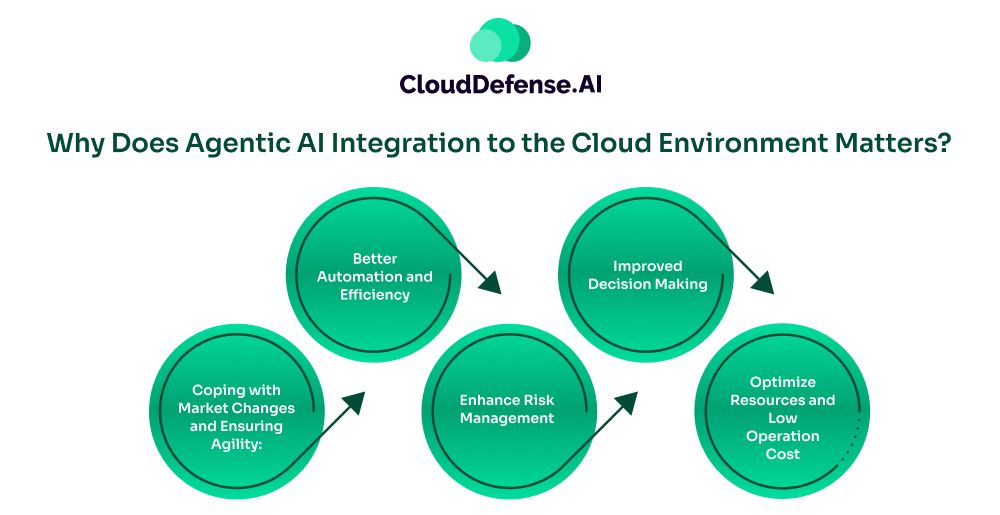

Why Does Agentic AI Integration to the Cloud Environment Matters?

In today’s complex cloud environment, traditional AI tools simply don’t cut it. Organizations are integrating Agentic AI as it can bring a major improvement in operation, security, and development. Here are the key reasons:

- Better Automation and Efficiency: Integrating Agentic AI into the cloud environments enhances the automation of different tasks by a margin. Its real-time reasoning capability and autonomous decision-making can streamline different decision-intensive workflows. The integration also improves operational efficiency due to its independent working capability. Thus, cloud experts can focus on creative tasks while it optimizes repetitive processes.

- Improved Decision Making: With Agentic AI integration in their cloud infrastructure, organizations can access actionable insights. It can analyze complex datasets and understand the context, helping in better decision-making. It leverages LLM- the reasoning engine, to analyze specific tasks and take actions. The improved decision-making makings come useful for time-sensitive tasks like cloud resource optimization.

- Coping with Market Changes and Ensuring Agility: Agentic AI once integrated constantly learns through real-time data streams and adapts to evolving contexts. By utilizing real-time data insights, it helps organization to adjust operation strategies and make decisions. The autonomous decision making capability allows the system to react to market changes, ensuring business agility.

- Enhance Risk Management: Agentic AI can be integrated with various cloud security tools to continuously monitor the cloud and hunt for threats. It can autonomously understand indications of threats and respond accordingly. By learning the surrounding context, AI agents can make changes to the cloud security policies and improve security posture.

- Optimize Resources and Low Operation Cost: Organizations are rapidly involving Agentic AI as it can intelligently allocate resources in the cloud infrastructure. It not only helps in better resource optimization but also reduces operational costs. For cloud end devices, it can understand its efficiency and health, and schedule maintenance accordingly. It saves organizations from additional operational costs.

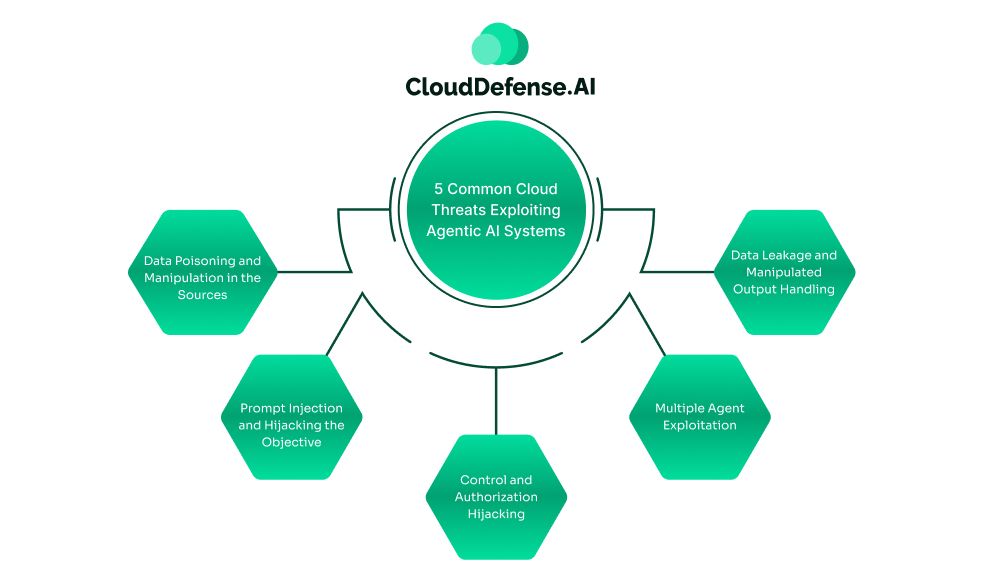

5 Common Cloud Threats Exploiting Agentic AI Systems

The agentic AI system is a highly capable AI agent that organizations are harnessing by integrating it with their cloud environment. However, this integration gives rise to various cloud threats that traditional approaches can’t solve. Here are 5 common cloud threats:

Data Poisoning and Manipulation in the Sources

One of the biggest cloud threats to Agentic AI is data poisoning. Agentic AI utilizes different sources like cloud-based data sources or training datasets to constantly train and make decisions. In many instances, Agentic AI accesses public API and databases for fine-tuning its knowledge base. Malicious attackers launch data injection attacks on these data sources, especially the sources stored in public cloud environments.

The attackers inject the datasets with malicious or biased data, hampering the learning process of the Agentic AI. A corrupted or manipulated knowledge base can lead to the AI agent making incorrect decisions, or delivering biased logic. A security agent based on Agentic AI can be tricked to overlook certain threats.

Prompt Injection and Hijacking the Objective

Agentic AI often aligns its learning based on the task or objective provided through natural language prompts, cloud functions, or APIs. The input sources become a target for attackers where they launch prompt injection or hijack objectives to divert the agent’s behavior.

Attackers utilize prompt injection to send malicious prompts or instructions in the user’s input to manipulate the agent or hijack its intent. It redirects the Agentic AI to perform manipulated tasks or behave erratically. An attacker also makes an effort to hijack the objective of the Agentic AI by feeding it with crafted inputs. As a result, it slowly shifts the agent’s objective to something malicious and ultimately fulfills the attacker’s main intent.

Control and Authorization Hijacking

Control hijacking and privilege escalation are some serious cloud threats that can lead to serious consequences. Agentic AI in the cloud is given different levels of access control on resources and systems as they work autonomously. By exploiting vulnerabilities on the Agentic AI’s platform or by gaining access to a user’s account, attackers can gain control over the AI agents.

They can alter the decisions and actions of these AI agents to perform malicious tasks or launch a cyber attack. In addition, Agentic AI is also vulnerable to privilege escalation attacks. Attackers can exploit vulnerabilities in access control or IAM misconfiguration to enable the agent to access resources beyond their operational needs. Attackers often hijack critical resources to launch malware or deploy VMs for malicious tasks.

Multiple Agent Exploitation

In broad cloud environments, the organization often utilizes multiple Agentic AI systems to achieve specific objectives. Attackers exploit the communication between the AI agents along with their dependence to fulfill their malicious intent. It is a serious cloud threat where malicious actors exploit less-privileged or low-level agents in the cloud to gain access to highly privileged AI agents.

The AI agents can be manipulated to perform intended malicious tasks by using their elevated privileges. In certain instances, the attacker can trick the less-critical agents into requesting a specific agent to perform a malicious action. In multi-agentic AI systems where goals aren’t defined, attackers can prompt their malicious objective and create a specific outcome.

Data Leakage and Manipulated Output Handling

Attackers exploit the vulnerabilities in the output handling of Agentic AI which leads to unintended exposure of the backend system of the cloud. The process through which Agentic AI synthesizes and outputs information also creates an attack vector. When the output of the Agentic AI is manipulated and they are used by other systems without sanitation, it leads to serious security flaws.

In this type of cloud threat, attackers can exploit vulnerabilities in the system to get control over the AI agent’s reasoning and ultimately reveal various sensitive data. The Agentic AI systems processing user’s interaction in the cloud has become a target. These agents are manipulated to share data across users, leading to the disclosure of customer details.

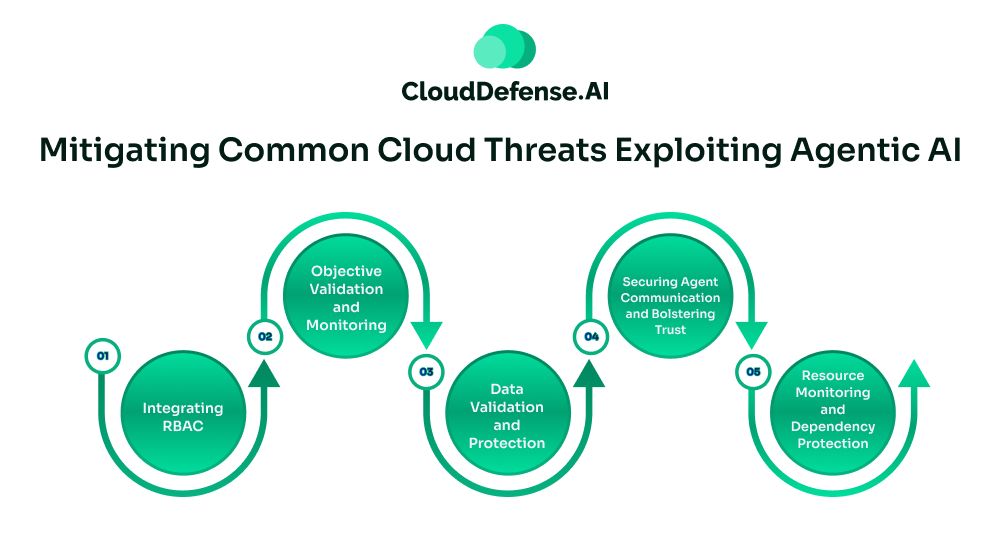

Mitigating Common Cloud Threats Exploiting Agentic AI

To mitigate common cloud threats, organizations can implement various preventive measures. Here are key strategies that you can implement:

- Integrating RBAC: An effective way to mitigate cloud threats like authorization hijacking is by integrating role-based access control. It defines the roles of the agents and their limitations to resources. Moreover, it provides agent roles with an expiry and when a specific task is achieved, the agent loses access.

- Objective Validation and Monitoring: The objective of agents should be validated when the agent starts behaving erratically. It should be isolated before it starts affecting other AI agents. Along with validation, the agent’s goal should be monitored and its execution of outputs.

- Data Validation and Protection: Agentic AI is constantly fine-tuning itself by sourcing data from different sources. Through source verification, the data integrity should be thoroughly validated. Version control on the knowledge database should be implemented as it will ensure there is no manipulation in the core knowledge base.

- Securing Agent Communication and Bolstering Trust: All agent-to-agent communication must be encrypted. The instructions or messages must be validated before they are executed. Zero trust principles can be implemented and interaction can be authenticated which will prevent the exploitation of multi-agent interaction.

- Resource Monitoring and Dependency Protection: Continuously monitoring all the resources and limiting their usage is an effective way to mitigate unauthorized resource usage. Load balancing can also be utilized to safeguard Agentic AI’s dependencies in the cloud.

Final Words

Preventing the Agentic AI system in your cloud environment becomes the next target of cyber attackers. Any cloud threat to any of your Agentic AI systems can lead to serious implications.

Build security strategies to prevent any attack vector and apply the mitigation policies to safeguard all the agents. Agentic AI systems in your cloud environment can be both beneficial and harmful. Your approach toward preventing the common cloud threats will decide your organization’s security posture.